
# Visual Studio check text file encoding series

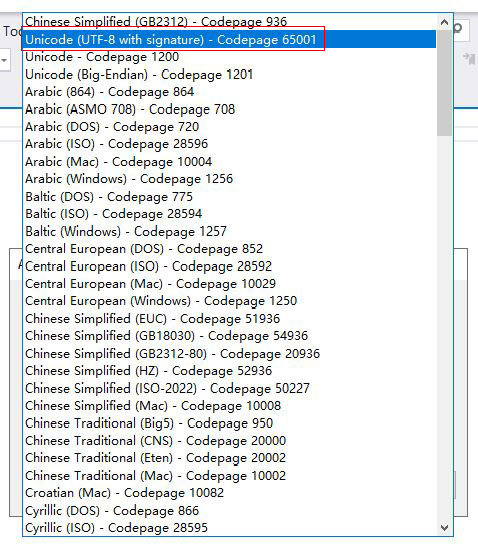

Besides UTF-8 there is another variable-length encoding, called UTF-7, but that's rarely used. The UTF-8 encoding is actually quite brilliant. If a file contains no characters over 127 (i.e. the 8th bit is never used), UTF-8 is completely compatible with ANSI: the two can be used interchangeably (except when a Byte Order Mark is used; read on!). This makes UTF-8 the encoding of choice in virtually all cases where text needs to be stored.

So Unicode was designed to standardize text representations, but with all these flavors, confusion is all too easy. I know I'm supposed to read a Unicode text file, but is it 16-bit or UTF-8? And if 16-bit, is it little endian or big endian? Fortunately Byte Order Marks (or Preambles) were introduced to distinguish the various Unicode styles. These consist of a fixed sequence of bytes at the start of the file, marking it as, say, Unicode 32-bit Big Endian. The sequences are chosen so that they are unlikely to start ordinary text; otherwise some completely ordinary text could be misinterpreted because it happened to begin with one of them. The following table lists the byte order marks supported by .NET:

| Encoding | Byte order mark |
|---|---|
| UTF-8 | `EF BB BF` |
| UTF-16, little endian | `FF FE` |
| UTF-16, big endian | `FE FF` |
| UTF-32, little endian | `FF FE 00 00` |
| UTF-32, big endian | `00 00 FE FF` |

However, while Byte Order Marks are useful, they're also optional. What happens when there is no byte order mark in the file? In other words: if a file does not contain a byte order mark, how should it be interpreted? More specifically, how does .NET interpret it? And what happens if the byte order mark is not the one we expect? Suppose we tell .NET to read the contents of a file in Unicode 16-bit mode, and the file actually contains a BOM for Unicode 32-bit?

These questions are not hard to answer: time for an experiment! For the test, I wrote a short program that writes a string containing some accented characters to a series of text files, using the ANSI encoding and each of the flavors of Unicode that have a BOM.
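You can explore the BOM questions above without .NET. The minimal Python sketch below mimics the experiment. It builds the byte content that each test file would have, with the accented string encoded as ANSI and under each of the five byte order marks, and then sniffs the BOM back. Python is our own choice here, not the language of the original experiment. The helper names `detect_bom` and `decode_with_bom` are hypothetical. Falling back to cp1252 when no BOM is present is an assumption that suits a Western European Windows system; it says nothing about what .NET does. Note that the UTF-32 little-endian mark must be checked before the UTF-16 little-endian mark, because both start with `FF FE`.

```python
import codecs

# Known BOMs, longest first: the UTF-32 LE mark (FF FE 00 00) begins with the
# UTF-16 LE mark (FF FE), so it has to be tested before it.
BOMS = [
    ("utf-32-le", codecs.BOM_UTF32_LE),
    ("utf-32-be", codecs.BOM_UTF32_BE),
    ("utf-8", codecs.BOM_UTF8),
    ("utf-16-le", codecs.BOM_UTF16_LE),
    ("utf-16-be", codecs.BOM_UTF16_BE),
]


def detect_bom(data):
    """Return (encoding name, BOM length), or (None, 0) if there is no BOM."""
    for name, bom in BOMS:
        if data.startswith(bom):
            return name, len(bom)
    return None, 0


def decode_with_bom(data, fallback="cp1252"):
    """Decode using the BOM if present; otherwise assume the ANSI fallback."""
    name, skip = detect_bom(data)
    return data[skip:].decode(name or fallback)


if __name__ == "__main__":
    text = "Crème brûlée"
    # Byte content of each hypothetical test file.
    samples = {
        "ansi (cp1252)": text.encode("cp1252"),
        "utf-8": codecs.BOM_UTF8 + text.encode("utf-8"),
        "utf-16-le": codecs.BOM_UTF16_LE + text.encode("utf-16-le"),
        "utf-16-be": codecs.BOM_UTF16_BE + text.encode("utf-16-be"),
        "utf-32-le": codecs.BOM_UTF32_LE + text.encode("utf-32-le"),
        "utf-32-be": codecs.BOM_UTF32_BE + text.encode("utf-32-be"),
    }
    for label, data in samples.items():
        print(f"{label:14} {data[:4].hex(' '):12} -> {decode_with_bom(data)}")
```

If the checks ran in the opposite order, a UTF-32 LE file would be misdetected as UTF-16 LE. The two leftover zero bytes would then decode as a U+0000 character, and every character after that would be read with the wrong width.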

So now we already have three flavors of Unicode: 32-bit, 16-bit and 8-bit! It gets worse: because different processors store integers in memory in different byte orders ('Little Endian' vs. 'Big Endian'), the 16-bit and 32-bit types each come in two subflavors.
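This short Python sketch shows what the two subflavors mean in practice. It prints the bytes of the single character 'é' (U+00E9) in each 16-bit and 32-bit variant, and it also prints the byte order mark itself (the character U+FEFF) in both 16-bit orders. It uses only Python's built-in codecs. The choice of character is arbitrary.

```python
# The character U+00E9 ('é') in 16-bit and 32-bit Unicode, both byte orders.
ch = "\u00e9"

for enc in ("utf-16-le", "utf-16-be", "utf-32-le", "utf-32-be"):
    print(f"{enc:10} {ch.encode(enc).hex(' ')}")

# The byte order mark is the character U+FEFF; its encoded bytes reveal the order.
bom = "\ufeff"
print("utf-16-le BOM:", bom.encode("utf-16-le").hex(" "))  # ff fe
print("utf-16-be BOM:", bom.encode("utf-16-be").hex(" "))  # fe ff

# Reading big-endian bytes as little-endian silently yields a different character.
swapped = ch.encode("utf-16-be").decode("utf-16-le")
print(f"read with the wrong byte order: U+{ord(swapped):04X}")  # U+E900
```

The last line shows why the byte order matters. If you read the two bytes in the wrong order, you do not get an error. You get U+E900, an unrelated private-use character.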

# Visual Studio check text file encoding full

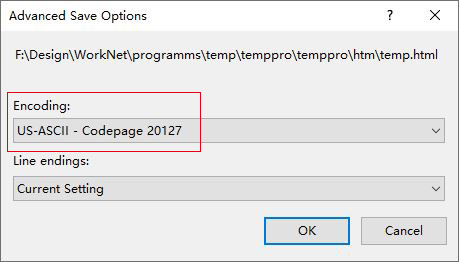

Visual Basic 5.0 made a complete switch: all strings were represented in Unicode in memory, but when writing to disk they were converted to ANSI. (A first indication of a possible text encoding problem!) The .NET Framework goes a step further: strings are always represented in Unicode internally, but when writing to a file you have the option to specify which text encoding to use.

32 bits per character sounds great, but it requires a whopping four times the storage of ANSI. The .NET Framework (like most Unicode systems) uses 16 bits per character internally. Even that's pretty inefficient when writing to a text file: every file doubles in size when converted to Unicode! Moreover, most characters in a Western language text are actually in the ANSI character set, so almost half of the storage of a 16-bit Unicode file is left unused anyway. UTF-8 solves this problem very neatly. It represents all characters below 128 as a single byte, and all other characters as a sequence of 2 to 4 bytes. This allows the full range of Unicode characters to appear in a text file, while for Western text the file grows only marginally.
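A quick Python sketch can check the size argument. It counts how many bytes a few sample characters take in UTF-8, UTF-16 and UTF-32, and then compares the totals for a plain ASCII string. The sample characters are our own picks. The sketch uses the `-le` codec variants so that Python does not prepend a BOM, which would skew the counts.

```python
# Sample characters: ASCII letter, accented Latin letter, euro sign, emoji.
samples = ["A", "\u00e9", "\u20ac", "\U0001F600"]

for ch in samples:
    sizes = [len(ch.encode(enc)) for enc in ("utf-8", "utf-16-le", "utf-32-le")]
    print(f"U+{ord(ch):04X}  utf-8={sizes[0]}  utf-16={sizes[1]}  utf-32={sizes[2]}")

# A plain ASCII string: UTF-8 gives exactly the same bytes as the ANSI code page,
# UTF-16 doubles the size and UTF-32 quadruples it.
text = "Hello, world"
print(len(text.encode("utf-8")), len(text.encode("utf-16-le")), len(text.encode("utf-32-le")))
assert text.encode("utf-8") == text.encode("cp1252")
```

For the ASCII string, the three totals are 12, 24 and 48 bytes. Among the sample characters, only the emoji needs 4 bytes in UTF-16 as well, because UTF-16 stores it as a surrogate pair.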

# Visual Studio check text file encoding windows

Many .NET methods that deal with strings, text files, XML files, et cetera have an overload that allows you to specify a text encoding. For a while I purposefully ignored those, but recently I got bitten once again by an encoding problem, so I decided it was time to get to the bottom of this.

One of the biggest changes from 16-bit to 32-bit Windows was the introduction of Unicode as a way to represent characters and strings. In 16-bit Windows, a character was simply a byte in the ANSI character set. That had its problems, though. The lower half (the lower 7 bits) was well standardized. Accented characters in the upper half (128 and up), however, were not always supported in the same way by all applications, so text could be corrupted when it moved between applications. In short, Unicode was designed to solve all these problems by simply creating a very, very large alphabet using 32 bits per character. All languages on Earth have a section of the Unicode alphabet, so it contains all kinds of Asian characters, but also Hebrew, Arabic, et cetera. Unicode support was added to languages (the 'wide' character in C++, for instance), and the Windows system DLLs started to expose functions in two varieties: those ending in 'A' (for 'ANSI') and those ending in 'W' (for 'wide').
